Recently I blogged about loading F1 Historical Data into Snowflake. The first time I downloaded the related .csv-files from Kaggle. In a consecutive post I loaded the data directly using the Kaggle API.

This time I am going to use the Ergast Developer API. “The Ergast Developer API is an experimental web service which provides a historical record of motor racing data for non-commercial purposes.” This database contains an API which can return data in XML, JSON, or PJSON formats. In this example we are going to work with the JSON-format.

Preparation

Before we start this example it’s good to read through some documentation. The first stop is the Ergast Developer API-website, which gives a lot of information about the different tables and how they relate to each other. Therefore it’s good to have a look at the ERD-diagram and the Ergast Database User Guide. Postman has a collection of documentation of how to work with the Ergast API.

Loading Circuits Data

The first example should be relatively easy, just loading Circuits Data. The first thing we need is the website API URL; https://ergast.com/api/f1/circuits.json. Depending on the Data you want to load, the url should be extended with the table name; https://ergast.com/api/f1.json. The Postman-documentation gives information about how to work with the Ergast API for (in this example) the Circuits.

Calling the Ergast API

Using the Postman-documentation we can find the relevent JSON-path. Then we have to specify what data we want to convert into a pandas DataFrame. So in a few steps we can load the required data into a DataFrame to then load it into Snowflake. The Ergast API prevents to load all data in one go. Therefore we have to loop through the API website until all records a retrieved. Find below how to ‘Get the Ergast API’, ‘Parse the JSON Data’, ‘Loop through the API website’, ‘Prepare the JSON Data’ and ‘Construct a DataFrame’.

I must say, I am not an Python-expert. These is part of my learning experience. There might be other, better options, which I am open to further explore. Currently I depend on my Google-skills and the ability to convert my findings Ito working examples.

Loading Data into Snowflake

The DataFrame created in the previous paragraph can be used to load the data into Snowflake. In this example we have to ‘Connect to Snowflake’, ‘Create a Database / Schema’, ‘Create a Table’ and ‘Load the Table’

Connect to Snowflake

First we have to Snowflake from Python. I created a solution with a separate file for the credentials and a reference to that file in the .py-file. Therefore I created a short example.

Create a Database / Schema

With the connection to Snowflake ready, it’s time to create a Database and a Schema. Of course this can be done from the Snowflake UI, but for the sake of this example we do it from the Python-script.

Create a Table

Next step is to create a table from a Pandas DataFrame.

Load the Table

Finally all is in place to load the data into the created Snowflake table.

A working example

Now we have seen the different components, it might be interesting to see a working example. Going back to the Circuits-table, this could look something like the below Jupyter-notebook.

I am not completely sure how to get around these messages;

“<snowflake.connector.cursor.SnowflakeCursor at 0x7fd4d5db0ee0>”

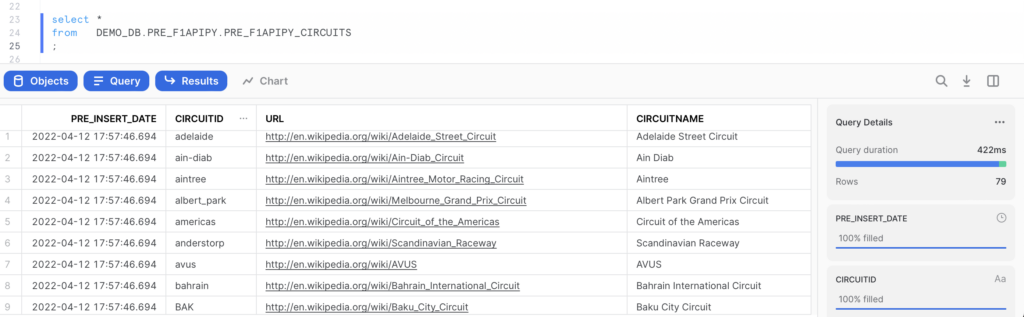

The end result in Snowflake is like expected. See below.

In this blogpost we have used the Ergast Developer API to load F1 Historical Data into a Python DataFrame and from there into Snowflake. In a follow-up post I will do something similar with the remaining tables. The code is on GitHub.

Thanks for reading and till next time.