Setup a Snowflake Sample Dataset and query data with Cortex Analyst

Last January I organized a Snowflake Cortex Analyst BUILD Hands-On Lab in Amsterdam. The Hands-On Lab was based on one the Snowflake QuickStartsSnowflake provides. A Snowflake QuickStart is a well structured tutorial you can follow along to get up and running with Snowflake. I thought it would be nice to setup an environment to see for myself how things work.

- Setup a Snowflake environment

- Generate Sample Sales data

- Generate Sample Queries with Snowflake Co Pilot

- Generate descriptions with Snowflake Cortex

- Generate Cortex Analyst Semantic Model specification

- Create Streamlit Sample Sales Chat App based on Cortex Analyst

Setup a Snowflake environment

Inside Snowflake I have created an environment where I can store the Snowflake objects, like the tables and a stage for the Semantic Model specification. The code is in GitHub.

I have created a Table, a Schema, warehouses to run queries and notebooks and a stage to store the Semantic Model specification. More details about the latter later.

Generate Sample Sales data

For this use case I decided to use Snowflake Notebooks to create the various tables and generate the data. This is just a rondom choice. There are various ways to generate your own sample data. You can e.g. use the Snowflake Synthetic Data Generator.

The Snowflake Notebook has a few steps to generate the sample data:

- Import relavant Python packages. The Faker package is used to generate the sample data

- Setting up the Snowflake connection

- Create the Data Generators per table; sales fact and stores, customers, products and time as dimensions

- Create the tables and populate data

The ipynb-file is on Github and can be imported into a Snowflake Notebook.

Generate Sample Queries with Snowflake Co Pilot

As part of a Semantic Model specification, you can add ‘verified queries’. I thought it could be interesting to try out Snowflake’s Copilot. I asked Copilot the following question: “Supply some sample queries we can ask to the data to get more insights. Please show things like possible joins, top N and comparisons between e.g. years or stores”

This generates a few sample queries, I added to the code is in GitHub.

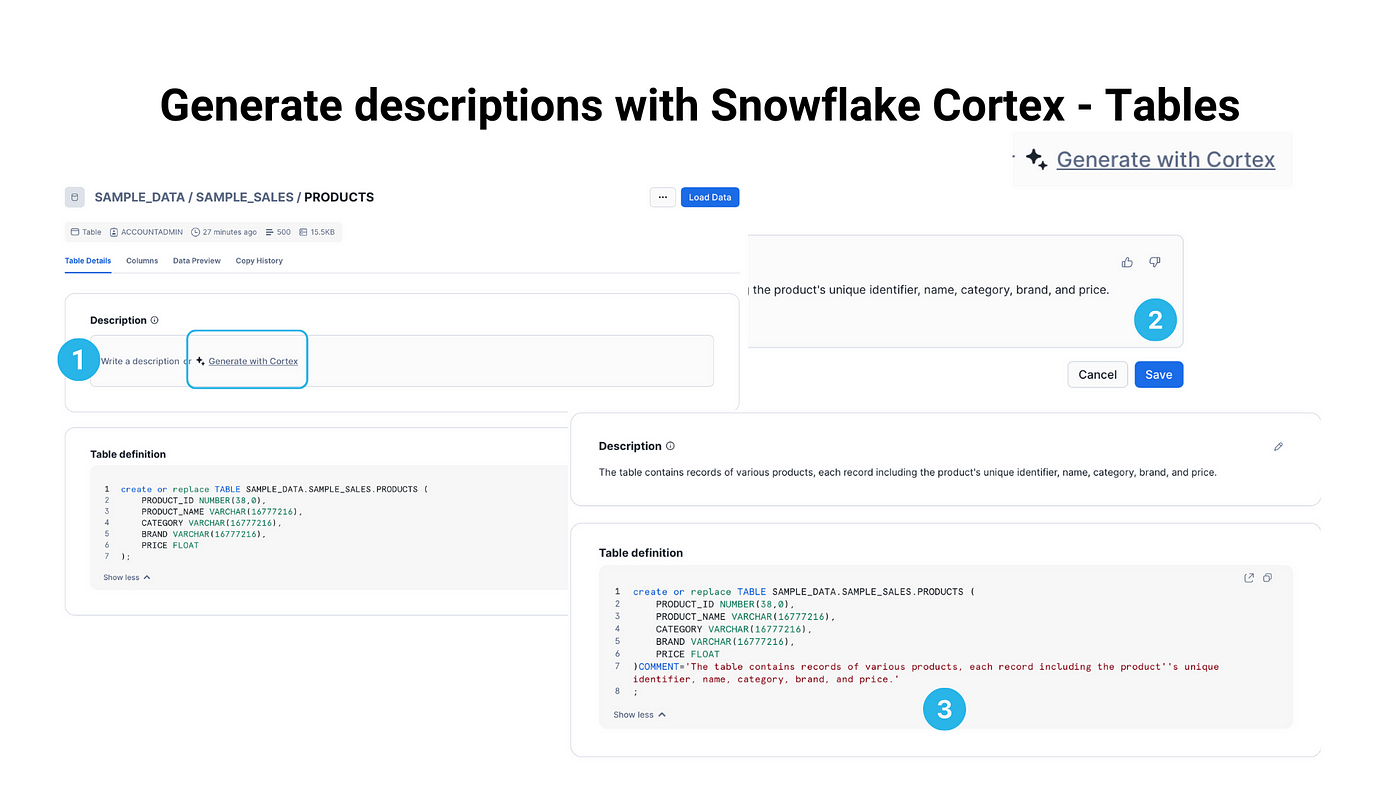

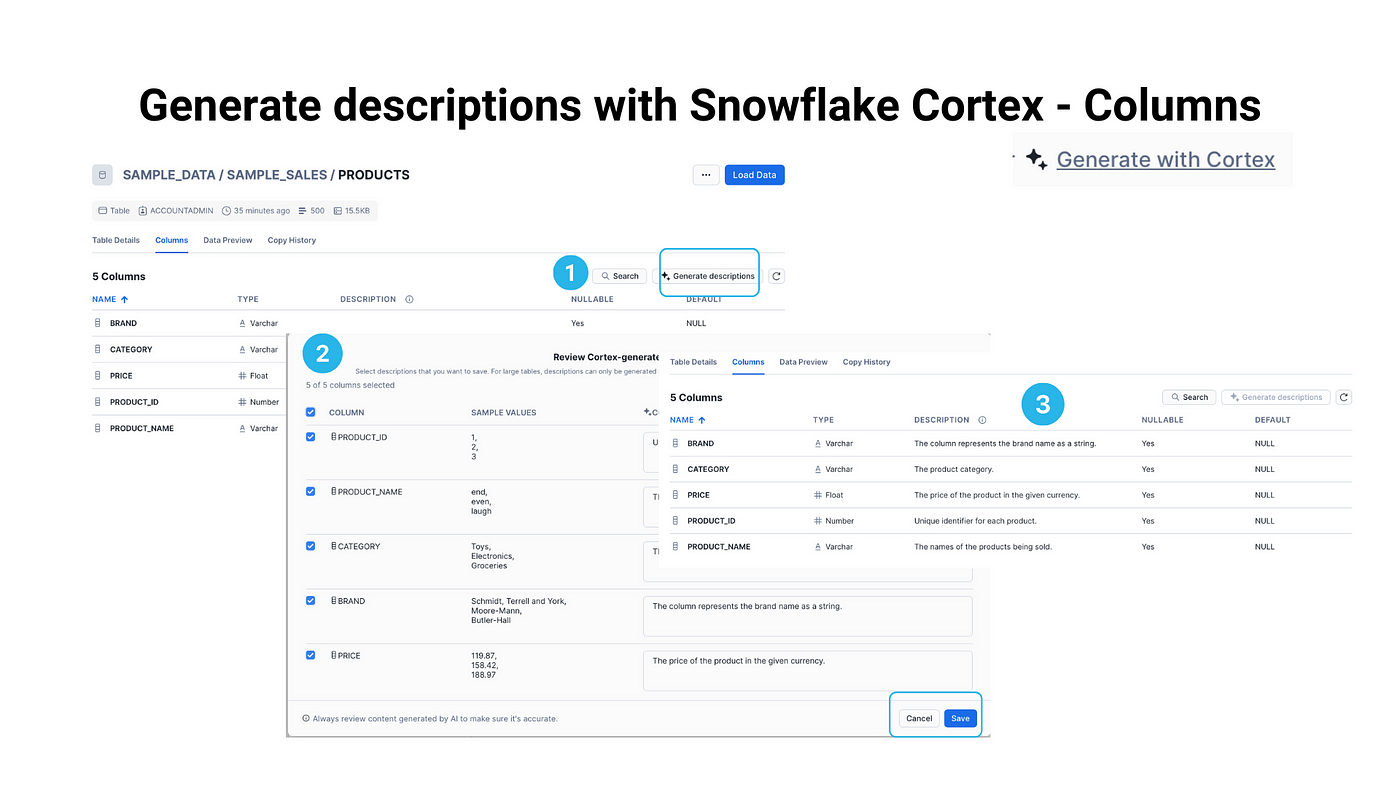

Generate descriptions with Snowflake Cortex

Another part of a Semantic Model specification are the descriptions. You can add these manually to Snowflake, but you can also Generate descriptions with Snowflake Cortex.

I generated descriptions for the Tables…..

…. and the Columns.

Generate Cortex Analyst Semantic Model specification

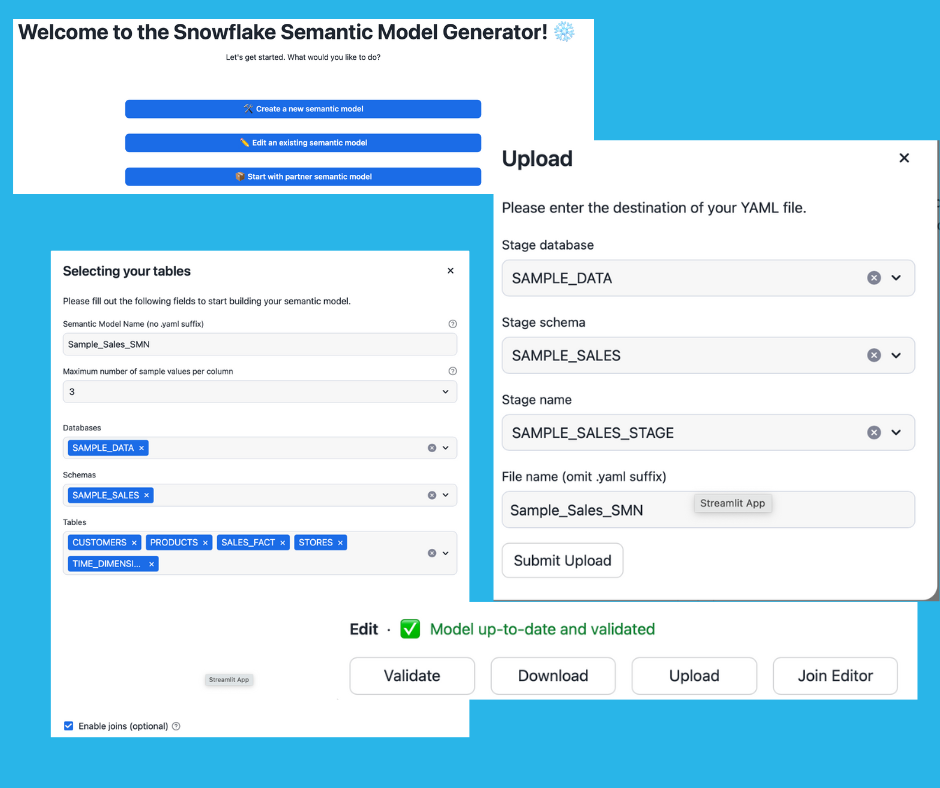

Now it’s time to generate the Semantic Model specification. Therefore I installed the Semantic Model Generator.

First I created a new Semantic Model. Working with the Semantic Model Generator is a matter of Trial & Error. I had to add Primary Keys and a verified query I generated with Snowflake Copilot earlier. Also I added the various joins to relate the Sales Fact-table to the dimensions. Next to that I had to change the Primary Key columns, because they were stored as measures in the generated Semantic Model.

If the model is up-to-date and validated, the model ca be uploaded to the Snowflake Stage. An example of the end result is on GitHub.

Create Streamlit Sample Sales Chat App based on Cortex Analyst

Finally I took the Streamlit App I used in the Snowflake Cortex Analyst BUILD Hands-On Lab. The only thing I had to change was:

- Title (optional)

- DATABASE

- SCHEMA

- STAGE

- FILE

Conclusion

In this example I showed how Cortex Analyst allows “business users to ask natural-language questions over their structured data to perform analysis and receive trusted answers to business questions.”

The whole setup serves as an example and does not necessarily resembles a production-like environment. That’s how you should look at the code in GitHub.

Till next time.

Snowflake Data Superhero and Chapter Lead for the Dutch Snowflake ❄️ User Group. Online also known as; DaAnalytics.