Data is one of the most important assets in organizations today. If you cannot trust the Data, decision making based on this Data is impossible. Measuring Data Quality is therefore essential. Inspired by the blogpost “Data Quality Framework in Snowflake” by Divya Rajesh Surana, I decided to take it one step further by including Streamlit.

The Data Quality framework contains several checks. Their results are stored in a database table, which is populated by a procedure. The code to create a Data Quality user, role, warehouse, database, etc. is on GitHub, together with the additional objects: the table that holds the metrics and the procedure that populates it.

In the original blogpost, you call the procedure with the statement below:

When the procedure has run successfully, you can query the Data Quality Metrics table with the query below:

Streamlit

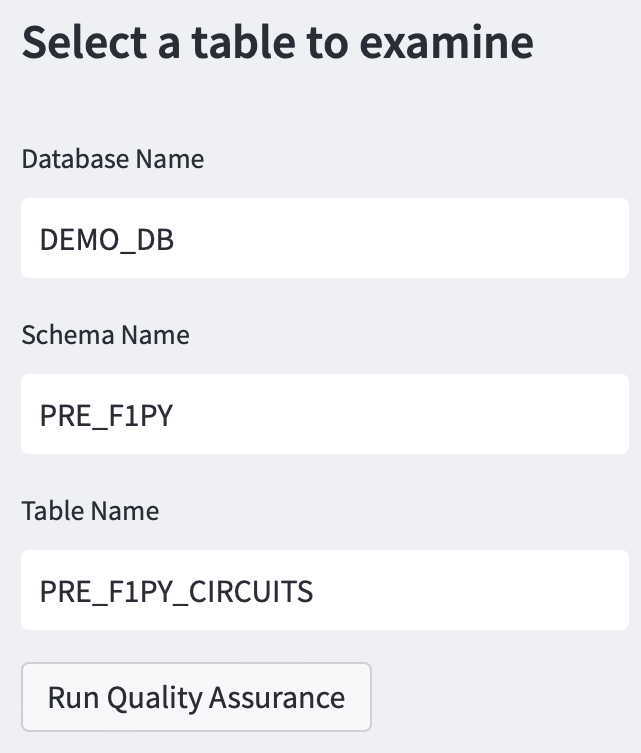

While this works fine from within Snowflake, I thought it would also be interesting to build a Streamlit app for it. The app consists of:

- input fields to select the table (database, schema and table) you want to measure for Data Quality

- a button that calls the procedure

- the output of the query in a table

In a previous Streamlit blogpost I covered connecting to Snowflake, which I will not repeat here. That’s a relatively straightforward process.

Creating a sidebar with some text inputs is easy. Call the Streamlit library through its usual alias ‘st’, and make sure you include ‘sidebar’ in the call to put the text inputs in the sidebar.

See the code below for more details.

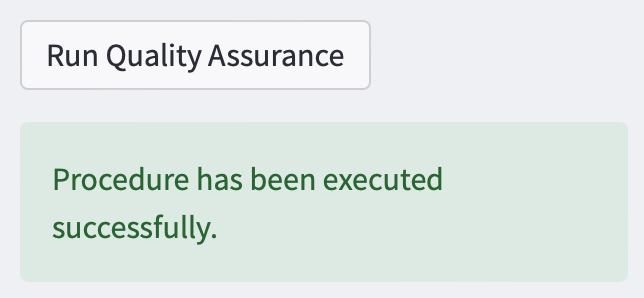
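As a rough sketch of the sidebar described above (not necessarily the exact code of the app): the widget labels and the `read_target_table` helper are assumptions made for illustration, while `st.sidebar.text_input` is the regular Streamlit call. The helper receives the imported `streamlit` module as an argument, so it can be tried out without a running app.

```python
def read_target_table(st):
    """Read database, schema and table names from sidebar text inputs.

    `st` is the imported Streamlit module (`import streamlit as st`).
    The labels below are illustrative, not taken from the original app.
    """
    st.sidebar.header("Table to check")
    database = st.sidebar.text_input("Database")
    schema = st.sidebar.text_input("Schema")
    table = st.sidebar.text_input("Table")
    # Trim stray whitespace and upper-case the names, since unquoted
    # Snowflake identifiers are resolved in upper case.
    return tuple(name.strip().upper() for name in (database, schema, table))
```

In the app script this would be used as `database, schema, table = read_target_table(st)` right after `import streamlit as st`.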

Clicking the ‘Run Quality Assurance’ button executes the procedure ‘QUALITY_ASSURANCE.QUALITY_CHECK.DATA_QUALITY’. When all is OK, a green message appears: ‘Procedure has been executed successfully.’

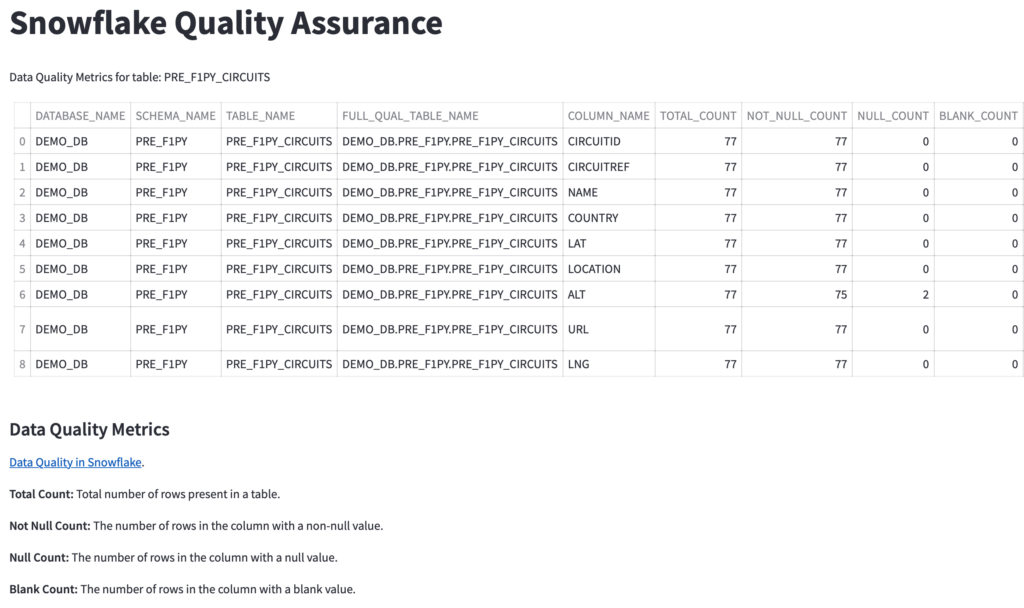
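The button logic could look roughly like the sketch below. It rests on a few assumptions: the procedure is taken to accept the database, schema and table name as three string arguments (its real signature is not shown here), `cursor` is a cursor from the Snowflake Python connector with its default `%s` parameter binding, and the helper names are made up for this example. `st.button`, `st.success` and `st.error` are standard Streamlit calls.

```python
PROCEDURE = "QUALITY_ASSURANCE.QUALITY_CHECK.DATA_QUALITY"


def run_quality_check(cursor, database, schema, table):
    """Call the Data Quality procedure for one table.

    Assumes the procedure takes (database, schema, table) as strings;
    adjust the argument list to the actual signature.
    """
    # Bind the values rather than formatting them into the SQL text.
    cursor.execute(f"CALL {PROCEDURE}(%s, %s, %s)", (database, schema, table))


def quality_button(st, cursor, database, schema, table):
    """Render the button and report the outcome; return True on success."""
    if not st.button("Run Quality Assurance"):
        return False  # button not clicked in this rerun
    try:
        run_quality_check(cursor, database, schema, table)
    except Exception as exc:  # show the error in the app instead of crashing
        st.error(f"Procedure failed: {exc}")
        return False
    st.success("Procedure has been executed successfully.")
    return True
```

Binding the three names as parameters keeps whatever is typed into the sidebar out of the SQL text itself, which seems a sensible default for an app that accepts free-text input.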

Executing the procedure results in the output below: a table with the results of querying the QUALITY_ASSURANCE.QUALITY_CHECK.DATA_QUALITY_METRICS table.
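Fetching and displaying those results might be sketched as follows. The helper names are hypothetical, the query simply selects every column because the layout of the metrics table is not spelled out here, and `cursor` is again assumed to be a Snowflake connector cursor. `st.dataframe` is the standard Streamlit call for rendering tabular data.

```python
METRICS_TABLE = "QUALITY_ASSURANCE.QUALITY_CHECK.DATA_QUALITY_METRICS"


def fetch_metrics(cursor):
    """Return (column_names, rows) from the metrics table."""
    cursor.execute(f"SELECT * FROM {METRICS_TABLE}")
    # The first field of each description entry is the column name.
    columns = [col[0] for col in cursor.description]
    return columns, cursor.fetchall()


def show_metrics(st, cursor):
    """Display the metrics in the app, one dict per row."""
    columns, rows = fetch_metrics(cursor)
    st.dataframe([dict(zip(columns, row)) for row in rows])
```

Calling `show_metrics(st, cursor)` after a successful procedure run would then refresh the table in the app.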

The complete code of this Streamlit Application is on GitHub. In this case I have deployed the Application on Streamlit Cloud.

Closing Statements

This was the first solution I found online, because I was reading on Medium. I will not argue that this is the best solution; I found other solutions as well. Taking someone’s code as a head start and creating your own version out of it is easy. Building a Streamlit Application around it is even easier and worth a try. If I can do it…

Till next time.