Snowflake Summit 2023 was held from June 26-29, 2023, at the Caesars Forum Conference Center in Las Vegas. The conference featured over 500 sessions, hands-on labs, certification opportunities, and over 250 partners in Basecamp.

Snowflake Summit is the place for announcements.

“Snow Limits on Data”

The announcements fall into roughly three categories:

- Snowflake as a Single Data Platform

- Deploy, distribute and monetize applications

- Programmability

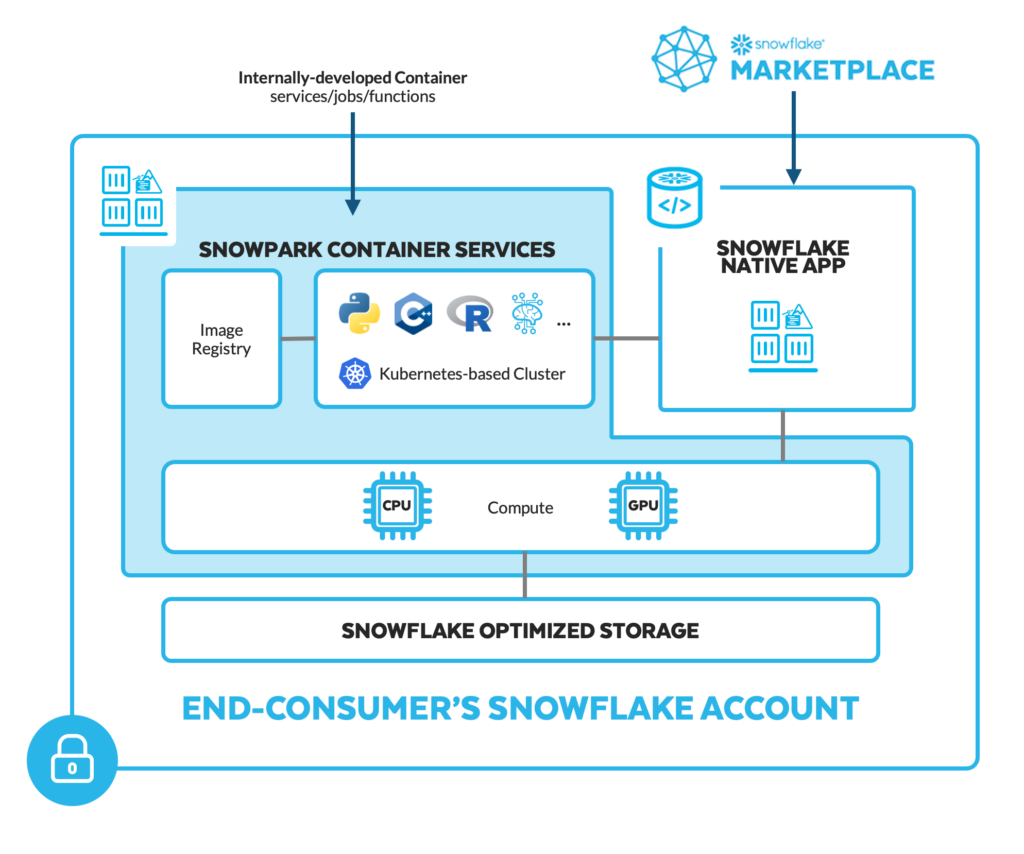

Snowpark Container Services

“Securely Deploy and run Sophisticated Generative AI and full-stack apps in Snowflake”

Snowpark Container Services enables developers to deploy, manage, and scale containerized workloads using secure Snowflake-managed infrastructure with configurable hardware options, such as GPUs.

The introduction of Snowpark Container Services lets the Snowflake compute infrastructure handle a wider variety of workloads: securely running full-stack applications, hosting large language models (LLMs), training models, and more. The service allows developers to register, deploy, and execute containers and services within Snowflake’s managed infrastructure.

Developers have the flexibility to build container images using the languages and frameworks they prefer, such as Python, R, C/C++, or React. These containers can run on configurable hardware options, including GPUs, through a partnership with Nvidia. This extends Snowpark’s support for machine learning development and execution.

Snowflake aims to make it possible to migrate most containers from other platforms into Snowflake as a lift-and-shift operation. The idea behind Snowpark Container Services is to let users build whatever they want and bring their code closer to the data within the Snowflake environment.

Read more about it on the Snowflake blog.

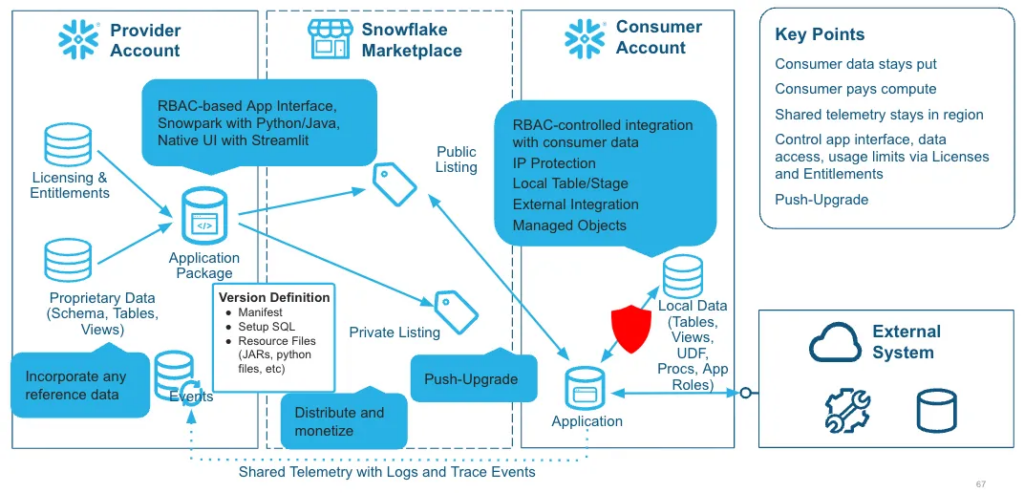

Snowflake Native Application Framework

This feature allows developers to build and deploy data-driven applications natively within Snowflake.

Snowflake’s Native Application Framework (Public Preview on AWS) aims to empower developers by facilitating the creation, distribution, sharing, and monetization of applications. As a provider or developer, you can leverage Snowflake features such as Snowpark, Streamlit, Container Services, Snowpipe Streaming, and more to develop applications. These applications can then be distributed to Snowflake’s customer base through the Snowflake Marketplace, either privately or publicly.

Furthermore, you have the freedom to monetize your applications using your preferred charging models, such as monthly subscriptions, consumption-based models, one-time fees, and others. Consumers can easily install these applications into their Snowflake accounts using their Snowflake credits, ensuring a secure process. This eliminates the need for consumers to move data or for developers/providers to manage the underlying infrastructure. It’s an efficient and hassle-free approach.

Snowflake’s Native Application Framework opens up a range of possibilities for building applications, including data curation and enrichment, advanced analytics, connectors for loading data from various sources into Snowflake, cost and governance management, data clean rooms, and more.

Numerous native apps from established organizations have already been published on the Snowflake Marketplace, highlighting its growing ecosystem and the value it brings. Snowflake aims to become a platform for building and deploying data-driven applications natively within Snowflake: an app store for data and applications.

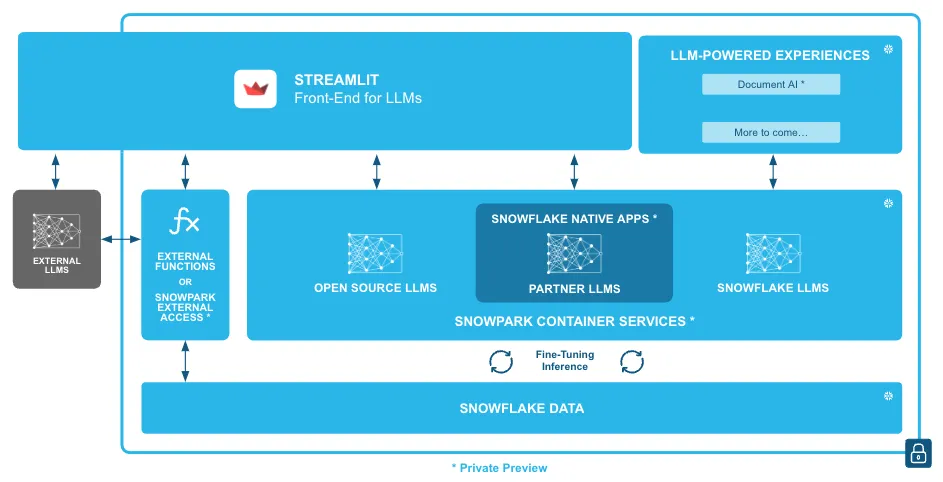

Machine Learning, LLM, and Generative AI

Snowflake provides tools for building powerful ML models at scale and for managing ML data, model pipelines, and deployments in Snowflake. In addition, Snowflake makes it easier for analysts and business users to get more insight using ML-powered functions.

Snowflake’s Large Language Model (LLM) incorporates Applica’s generative AI technology. In September 2022, Snowflake acquired Applica, an AI specialist, and has since integrated its multimodal LLM into several new products.

Snowflake announced a new feature called the Snowflake Model Registry, which is currently in Private Preview. The Snowflake Model Registry is part of Snowflake’s efforts to streamline and scale machine learning model operations (MLOps). It allows customers to store, publish, discover, and deploy machine learning models in Snowflake for inference.

“Snowflake increases investment in Microsoft partnership, focusing on new product integrations with Microsoft’s Azure OpenAI, Azure ML, and more”

Document AI

One of these products, Document AI, is currently undergoing a Private Preview phase. Document AI leverages the power of AI to assist customers in extracting valuable insights from unstructured data. This integration showcases Snowflake’s commitment to leveraging innovative AI capabilities to enhance its offerings and provide valuable solutions to its customers.

In the business landscape, unstructured data in the form of documents is ubiquitous. However, extracting valuable analytical insights from these files has often been limited to machine learning (ML) experts or isolated from other data sources. Snowflake addresses this challenge by extending its native support for unstructured data and introducing the built-in Document AI, which is currently in Private Preview. This innovative solution facilitates easier comprehension and extraction of value from documents using natural language processing techniques.

Document AI harnesses the capabilities of a purpose-built, multimodal large language model (LLM). Because this model is integrated directly into the Snowflake platform, organizations can extract pertinent information, such as invoice amounts or contractual terms, from documents stored securely in Snowflake. The results can be further refined and adjusted using a visual interface and natural language interactions.

Data engineers and developers also benefit from the inclusion of Document AI. They can programmatically leverage the built-in or fine-tuned models within pipelines using Streams and Tasks, as well as in various applications, enabling streamlined and automated inference processes.

With Snowflake’s built-in Document AI, organizations can unlock the potential of their unstructured data, empowering a broader range of users to derive actionable insights from documents and seamlessly integrate them into their analytics workflows.

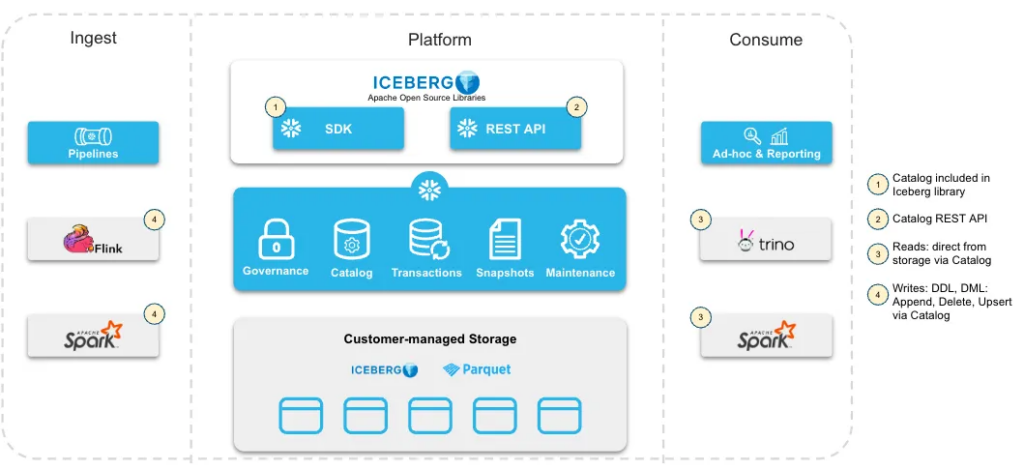

Extended Iceberg Table support

Snowflake now offers a unified table type that allows users to specify the catalog implementation (Snowflake-managed or customer-owned, such as AWS Glue) for transaction metadata, while storing data externally (e.g., in S3, ADLS2, or GCS) in open formats based on the Iceberg specification. This approach is intended to deliver high-speed performance for both managed and unmanaged Iceberg data.

Apache Iceberg is the industry standard for open table formats and continues to grow in popularity. With Iceberg tables as first-class tables, users can leverage the full power of the Snowflake Platform, including features like governance, performance optimization, marketplace/data sharing, and more. Furthermore, Iceberg tables provide interoperability with the wider Iceberg ecosystem, enhancing compatibility and flexibility.

Managed Iceberg Tables enable seamless read/write operations within Snowflake, utilizing Snowflake as the catalog that external engines can effortlessly access. On the other hand, Unmanaged Iceberg Tables establish a connection between Snowflake and an external catalog, allowing Snowflake to read data from Iceberg Tables stored externally.

To further enhance customer convenience, Snowflake introduces an easy and cost-effective method to convert an Unmanaged Iceberg Table into a Managed Iceberg Table. This feature simplifies the onboarding process for customers, eliminating the need to rewrite entire tables.
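
The distinction between managed and unmanaged Iceberg Tables can be pictured with a small conceptual model. This is only a sketch of the behaviour described above, not Snowflake's API: the class names, the table name, and the storage path are all hypothetical, and the read/write rule simply encodes the description in this section.

```python
from dataclasses import dataclass, replace
from enum import Enum


class Catalog(Enum):
    SNOWFLAKE = "snowflake-managed"
    EXTERNAL = "customer-owned"  # for example AWS Glue


@dataclass(frozen=True)
class IcebergTable:
    """Conceptual stand-in for an Iceberg table registered in Snowflake."""
    name: str              # hypothetical table name
    storage_location: str  # hypothetical external location holding the data files
    catalog: Catalog

    @property
    def managed(self) -> bool:
        return self.catalog is Catalog.SNOWFLAKE

    @property
    def snowflake_can_write(self) -> bool:
        # As described above: managed tables are read/write in Snowflake,
        # unmanaged tables are read by Snowflake through an external catalog.
        return self.managed


def convert_to_managed(table: IcebergTable) -> IcebergTable:
    """Switch the catalog to Snowflake; the data location stays the same (no rewrite)."""
    return replace(table, catalog=Catalog.SNOWFLAKE)


unmanaged = IcebergTable("events", "s3://example-bucket/events/", Catalog.EXTERNAL)
converted = convert_to_managed(unmanaged)
print(unmanaged.snowflake_can_write, converted.snowflake_can_write)
```

The point of the model is that conversion changes only who owns the catalog; the files in external storage are left where they are.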

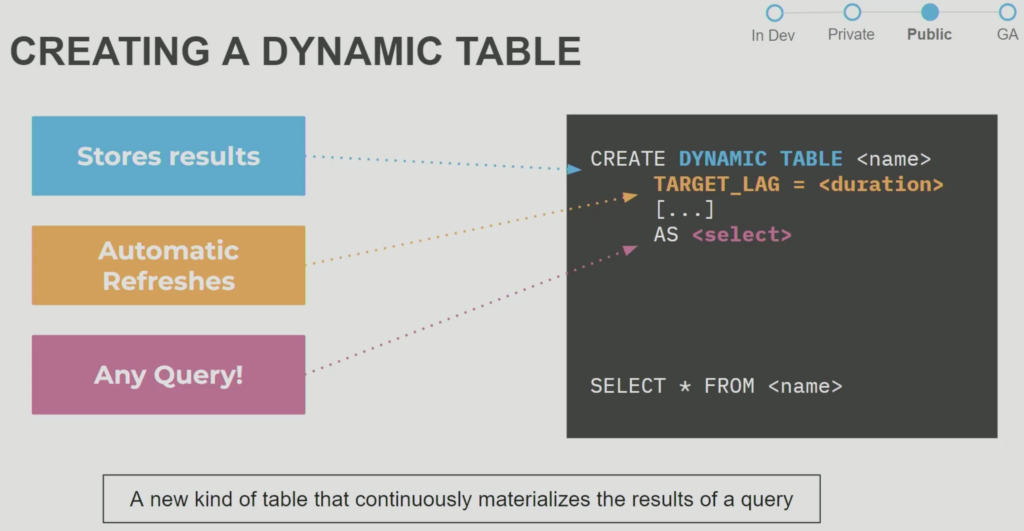

Dynamic Tables

Dynamic Tables are a new table type in Snowflake, in Public Preview as of Snowflake Summit 2023. These tables use straightforward SQL statements as building blocks for declarative data transformation pipelines.

Dynamic Tables offer a dependable, cost-effective, and automated approach to data transformation, ensuring seamless data consumption. The scheduling and orchestration required for these transformations are efficiently managed by Snowflake, providing transparency and simplifying the overall process.

With Dynamic Tables, users can effortlessly leverage the power of SQL to create reliable and automated data pipelines within the Snowflake environment.
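
To make the declarative idea concrete, here is a minimal sketch that renders two chained Dynamic Table definitions as SQL text. The table, column, and warehouse names are hypothetical, and the `TARGET_LAG` and `WAREHOUSE` keywords follow the Snowflake documentation as I understand it, so check the current docs before running the generated statements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DynamicTable:
    """One declarative pipeline step: a name, a freshness target, and a query."""
    name: str        # hypothetical table name
    target_lag: str  # how stale the result may become, e.g. "5 minutes"
    warehouse: str   # hypothetical warehouse that runs the refreshes
    query: str       # the SELECT that defines the table's contents

    def to_sql(self) -> str:
        """Render a CREATE DYNAMIC TABLE statement for this step."""
        return (
            f"CREATE OR REPLACE DYNAMIC TABLE {self.name}\n"
            f"  TARGET_LAG = '{self.target_lag}'\n"
            f"  WAREHOUSE = {self.warehouse}\n"
            f"AS\n{self.query.strip()};"
        )


# Two chained steps: the second reads from the first. Only the queries are
# written by hand; scheduling and refresh order are left to Snowflake.
cleaned = DynamicTable(
    name="orders_cleaned",
    target_lag="5 minutes",
    warehouse="transform_wh",
    query="SELECT order_id, customer_id, amount FROM raw_orders WHERE amount IS NOT NULL",
)
totals = DynamicTable(
    name="customer_totals",
    target_lag="5 minutes",
    warehouse="transform_wh",
    query="SELECT customer_id, SUM(amount) AS total_amount FROM orders_cleaned GROUP BY customer_id",
)

pipeline_sql = "\n\n".join(step.to_sql() for step in (cleaned, totals))
print(pipeline_sql)
```

Note that nothing in the sketch describes when or in which order to refresh; the definition states the desired result and freshness, which is what distinguishes this from a hand-scheduled pipeline.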

For more information, refer to Dynamic Tables.

Streamlit

Streamlit (its Snowflake integration is in Private Preview on AWS) is an open-source Python library for developing interactive web applications for data science and machine learning. Its user-friendly framework streamlines the creation and deployment of custom web interfaces.

In addition to its existing capabilities, Streamlit now integrates with Snowflake, providing a seamless way to execute Streamlit code within the Snowflake environment. With this new feature, you can effortlessly deploy your Streamlit code onto Snowflake’s secure and reliable infrastructure, ensuring the application adheres to existing governance protocols.

Furthermore, you have the option to package your Streamlit application into a Snowflake Native Application, enabling external distribution to a broader audience. This integration combines the power of Streamlit with the robustness of Snowflake, offering a convenient and scalable solution for building and sharing interactive data science applications.

Snowflake Performance Index

Snowflake is committed to continuously enhancing performance and passing on the resulting savings to its customers. To provide increased transparency and metrics regarding performance improvements, Snowflake has introduced the Snowflake Performance Index (SPI).

According to Snowflake’s own tracking, the index shows that query duration for stable customer workloads has improved by 15 percent over the past eight months. These improvements reflect Snowflake’s ongoing effort to optimize price-performance and deliver more value to its customers.
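
As a rough illustration of what a 15 percent improvement in query duration means, the arithmetic can be sketched as follows. The 120-second baseline is a made-up number, and because the index is an aggregate over stable workloads, an individual query may improve more or less than this.

```python
def improved_duration(baseline_seconds: float, improvement: float = 0.15) -> float:
    """Duration after a fractional improvement (0.15 means 15 percent shorter)."""
    return baseline_seconds * (1.0 - improvement)


baseline = 120.0  # hypothetical query duration in seconds
after = improved_duration(baseline)
saved = baseline - after
print(f"{baseline:.0f}s -> {after:.0f}s (saves {saved:.0f}s)")
```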

Learn more about the Snowflake Performance Index.

Native Git integration

Snowflake now offers native integration with Git (in Private Preview), enabling users to effortlessly view, run, edit, and collaborate on Snowflake code stored in Git repositories. This integration brings a range of benefits, including seamless version control, streamlined CI/CD workflows, and enhanced testing controls for pipelines, machine learning models, and applications.

The Native Git integration enables you to easily deploy Streamlit, Snowpark, Native Apps, and SQL scripts directly into Snowflake, ensuring seamless synchronization with your Git repository. As a result, all the associated files appear as native files within the Snowflake environment. This unified approach allows for streamlined code deployment and management, facilitating efficient collaboration and ensuring that your code remains synchronized across both Snowflake and Git.

Mark your calendars for June 3-6, 2024

Snowflake Summit 2023 wrapped up with an exciting announcement: the upcoming Snowflake Summit 2024 will return to its founding roots in San Francisco, California.

Save the date: Snowflake Summit 2024 takes place June 3-6, 2024.

In the coming days, we will go a little deeper into a few of these announcements and some of the benefits of being a Data Superhero.

Till next time.

Director Data & AI at Pong and Snowflake Data Superhero. Better known online as DaAnalytics.