In a previous blogpost I showed how to load .csv-files into Snowflake. I downloaded these files manually from Kaggle. In this post I show you how to use the Kaggle API to automate that download step.

Install Kaggle

I use Kaggle from an Anaconda environment: I created a separate environment and installed the Kaggle package in it.

Kaggle API key

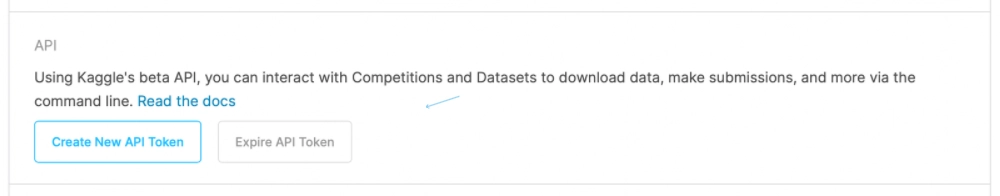

First we have to create a Kaggle API key, which is needed to connect to Kaggle. If you have a Kaggle account, you can create a new API token from your account settings (https://www.kaggle.com/<user_name>/account).

Standard implementation

Clicking the button above generates a kaggle.json-file. This file needs to be stored in a folder called .kaggle in your home directory. The kaggle.json-file is a small JSON document with two fields: your username and your API key.
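Below is a minimal sketch of how such a file can be read and checked from Python. It assumes the default location ~/.kaggle/kaggle.json and the layout {"username": "<user_name>", "key": "<api_key>"}. The helpers `load_kaggle_json` and `restrict_permissions` are hypothetical and not part of the Kaggle API, because the Kaggle client reads the file on its own.

```python
import json
import os
import stat
from pathlib import Path

# Default location where the Kaggle client looks for its credentials.
DEFAULT_KAGGLE_JSON = Path.home() / ".kaggle" / "kaggle.json"


def load_kaggle_json(path=DEFAULT_KAGGLE_JSON):
    """Read kaggle.json and return its contents as a dict.

    Hypothetical helper: the file is expected to look like
    {"username": "<user_name>", "key": "<api_key>"}.
    """
    path = Path(path)
    with path.open(encoding="utf-8") as fh:
        creds = json.load(fh)
    # Both fields are required to authenticate against Kaggle.
    missing = {"username", "key"} - set(creds)
    if missing:
        raise ValueError(f"{path} is missing field(s): {sorted(missing)}")
    return creds


def restrict_permissions(path=DEFAULT_KAGGLE_JSON):
    """Make the file readable and writable by its owner only (chmod 600)."""
    os.chmod(path, stat.S_IRUSR | stat.S_IWUSR)
```

On Linux and macOS the Kaggle client warns when the key file is readable by other users. That is why the sketch includes a chmod 600 helper.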

In the Python script you can also authenticate through the OS environment variables KAGGLE_USERNAME and KAGGLE_KEY, which the Kaggle API reads directly.
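Below is a minimal sketch of the environment-variable route. `set_kaggle_env` and `get_kaggle_api` are hypothetical helper names. `KaggleApi` and its `authenticate()` method come from the kaggle package. The import sits inside the function because, in the kaggle versions I know of, importing the package already triggers authentication, so the variables have to be set first.

```python
import os


def set_kaggle_env(username, key):
    """Expose Kaggle credentials through the environment variables the Kaggle client reads."""
    os.environ["KAGGLE_USERNAME"] = username
    os.environ["KAGGLE_KEY"] = key


def get_kaggle_api():
    """Return an authenticated KaggleApi instance.

    The import happens here, not at the top of the module: importing the
    kaggle package may already try to authenticate, so the environment
    variables must be set before this function runs.
    """
    from kaggle.api.kaggle_api_extended import KaggleApi

    api = KaggleApi()
    api.authenticate()  # picks up KAGGLE_USERNAME / KAGGLE_KEY (or kaggle.json)
    return api
```

Call `set_kaggle_env("<user_name>", "<api_key>")` with your own values and then `api = get_kaggle_api()`. Hard-coding the key in a script is only for illustration. Reading it from a file that stays out of version control is safer.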

Customised example

For this example, I was curious whether I could add the kaggle.json content to the Credentials-file I used in my previous example.

In this customised example, authentication to Kaggle goes hand in hand with authentication to Snowflake: the same Credentials-file is referenced for both Snowflake and Kaggle.
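Below is a sketch of how one shared file could serve both connections. It assumes, hypothetically, an INI-style Credentials-file with a [snowflake] and a [kaggle] section, read with Python's configparser. The actual format of the file from the previous post may differ, and the section names and helper functions are illustrative only. `snowflake.connector.connect()` is the entry point of the Snowflake Connector for Python.

```python
import configparser
import os

# Hypothetical layout of the shared Credentials-file (INI format):
#
# [snowflake]
# user = <snowflake_user>
# password = <snowflake_password>
# account = <snowflake_account>
#
# [kaggle]
# username = <user_name>
# key = <api_key>


def read_credentials(path):
    """Return the [snowflake] and [kaggle] sections as plain dicts."""
    parser = configparser.ConfigParser()
    if not parser.read(path):  # read() returns the list of files it could parse
        raise FileNotFoundError(path)
    return dict(parser["snowflake"]), dict(parser["kaggle"])


def authenticate_kaggle(kaggle_creds):
    """Authenticate to Kaggle with the [kaggle] section of the shared file."""
    # Set the variables before importing kaggle, which may authenticate on import.
    os.environ["KAGGLE_USERNAME"] = kaggle_creds["username"]
    os.environ["KAGGLE_KEY"] = kaggle_creds["key"]
    from kaggle.api.kaggle_api_extended import KaggleApi

    api = KaggleApi()
    api.authenticate()
    return api


def connect_snowflake(snowflake_creds):
    """Open a Snowflake connection with the [snowflake] section of the shared file."""
    import snowflake.connector

    # Keys such as user, password and account map onto connect() keyword arguments.
    return snowflake.connector.connect(**snowflake_creds)
```

With `sf_creds, kaggle_creds = read_credentials("credentials.ini")` each dict goes to its own connector helper. Keeping both sets of secrets in one file means there is only one file to keep out of version control.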

Download from Kaggle

The next step is downloading the files from Kaggle. For this we use the Kaggle API, specifically its dataset_download_files() method.
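Below is a minimal sketch of the download call. `dataset_download_files(dataset, path=..., unzip=..., quiet=...)` is the Kaggle API method mentioned above. The wrapper `download_dataset` and the default folder name `data` are hypothetical. `api` is expected to be an authenticated `KaggleApi` instance, and `dataset` uses Kaggle's `<owner>/<dataset-slug>` notation.

```python
from pathlib import Path


def download_dataset(api, dataset, target_dir="data", unzip=False):
    """Download a Kaggle dataset into target_dir and return that folder.

    api:     an authenticated KaggleApi instance
    dataset: identifier in the form "<owner>/<dataset-slug>"
    unzip:   passed through to the Kaggle API; when False the .zip-file is kept
    """
    target = Path(target_dir)
    target.mkdir(parents=True, exist_ok=True)  # create the folder if needed
    api.dataset_download_files(dataset, path=str(target), unzip=unzip, quiet=False)
    return target
```

For example, `download_dataset(api, "<owner>/<dataset-slug>")` leaves a .zip-file in the data folder. Taking `api` as a parameter keeps the function easy to test with a stand-in object.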

Unzip files

Datasets from Kaggle are downloaded in .zip-format. You can let the Kaggle API unzip the files for you by passing unzip=True to dataset_download_files().

An alternative is to keep the .zip-file and unzip it yourself in a separate step after the download.
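Below is a minimal sketch of that manual alternative using Python's built-in zipfile module. The original post may use a different statement. `unzip_downloads` is a hypothetical helper that extracts every .zip-file in the download folder into that same folder. It can also delete the archive afterwards.

```python
import zipfile
from pathlib import Path


def unzip_downloads(download_dir, remove_zip=False):
    """Extract every .zip-file in download_dir into that same folder.

    Returns the paths of the extracted members.
    """
    download_dir = Path(download_dir)
    extracted = []
    for archive in sorted(download_dir.glob("*.zip")):
        with zipfile.ZipFile(archive) as zf:
            zf.extractall(download_dir)
            extracted.extend(download_dir / name for name in zf.namelist())
        if remove_zip:
            archive.unlink()  # delete the archive once its contents are extracted
    return extracted
```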

Continuing

The remainder of the process is similar to the previous post (From .csv to Snowflake):

- Reading .csv Data

- Creating Snowflake objects

- Loading Data into Snowflake
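Below is a minimal, standard-library-only sketch that connects the download to the first of these steps by collecting the extracted .csv-files from the download folder. `read_csv_files` is a hypothetical helper, and the previous post may read the files differently. The Snowflake steps themselves are not repeated here.

```python
import csv
from pathlib import Path


def read_csv_files(download_dir):
    """Map each .csv-file name (without extension) to its (header, rows)."""
    tables = {}
    for csv_path in sorted(Path(download_dir).glob("*.csv")):
        with csv_path.open(newline="", encoding="utf-8") as fh:
            reader = csv.reader(fh)
            header = next(reader, [])  # first line holds the column names
            tables[csv_path.stem] = (header, list(reader))
    return tables
```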

Find the code for this blogpost on GitHub.

Thanks for reading and till next time.